Read the report in Portuguese

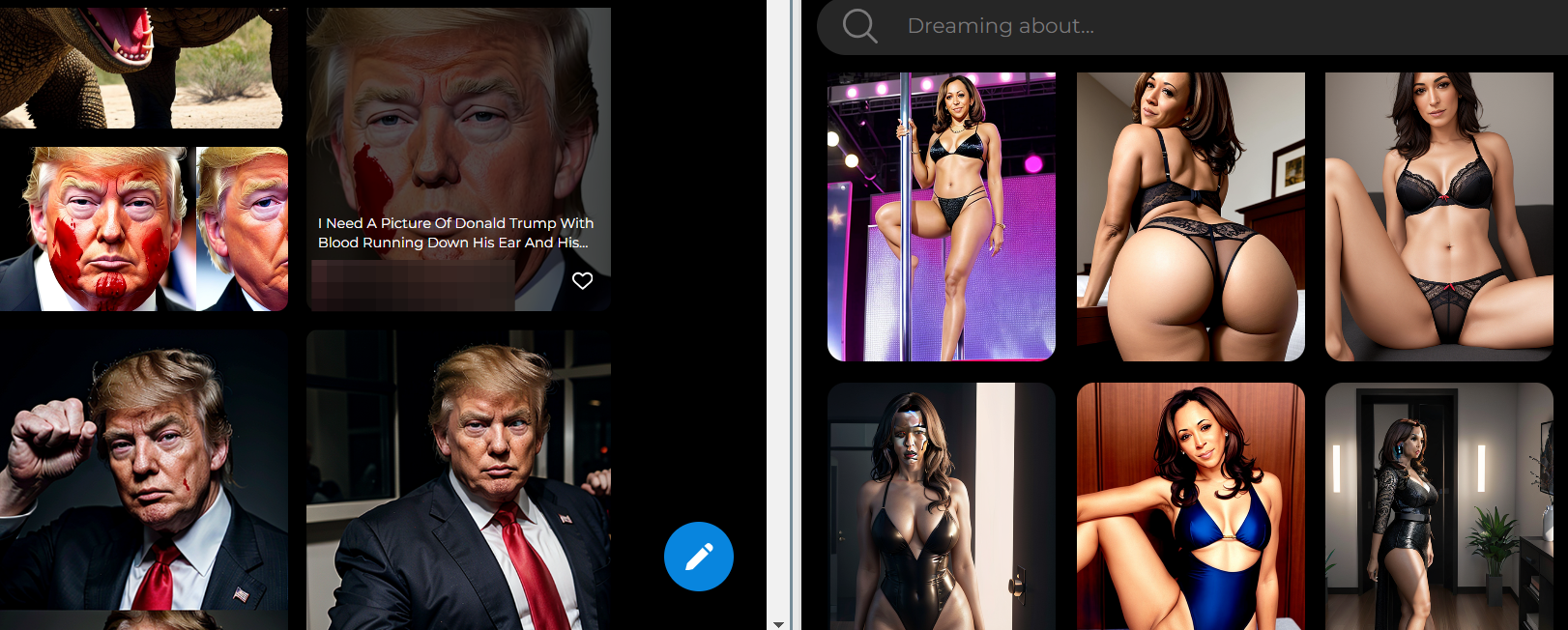

AI-generated deepfakes depicting Kamala Harris in sexually suggestive positions are being prominently indexed by major search engines. This shows how this type of disinformation has been spreading openly on some of the world's largest websites, and it is now affecting the campaign of the Democratic presidential candidate in the U.S.

Such content is easy to find. Nucleo ran simple searches on Google, Bing, and DuckDuckGo combining the candidate's name with the name of the site, which we are withholding because the site also hosts child abuse images.

Given Harris's worldwide prominence and her position as Vice President of the U.S., one would expect these platforms to apply at least some moderation to this kind of disinformation.

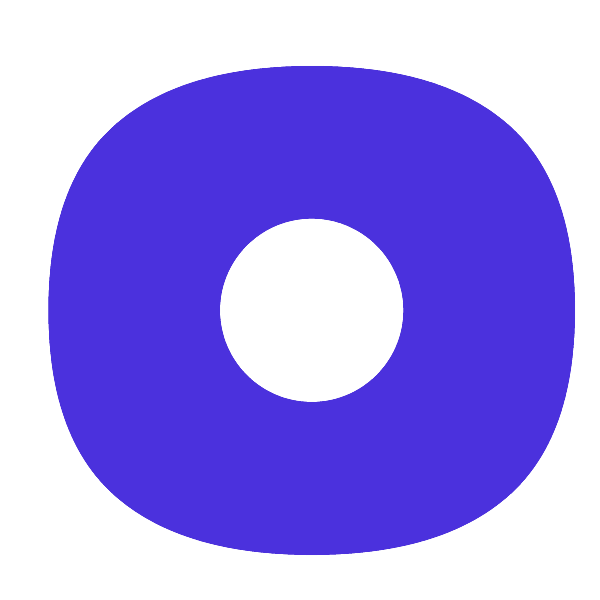

Nucleo also found deepfakes of other politicians, including President Joe Biden and former President and Republican candidate Donald Trump.

Image generators often have content moderation safeguards that prevent users from producing images of prominent public figures, such as politicians, judges, and activists.

On this site, however, not only did the platform allow these deepfakes to be created, but almost all of the images of Vice President Harris depict her in sexual or derogatory positions.

By contrast, the images of Trump and Biden are mostly “positive” and lack connotations meant to demean them.

What is this site?

The name of the site will not be revealed by Nucleo because it also contains explicit images of child sexual abuse.

According to the site itself, the generator uses models from Stability AI and Hugging Face, both startups that develop and distribute open-source AI models, which are free to use and can be modified by users.

Free users can access two Hugging Face models and still retain the right to use the images they produce commercially.

For subscribers, plans cost $9.90 or $19.90 per month, or $99 per year, and provide access to four different models. The site's terms and conditions prohibit users from creating “content that is abusive, offensive, pornographic, defamatory, misleading, obscene, violent, slanderous, hateful, or otherwise inappropriate.”

According to SemRush, a platform that analyzes keywords, domains, and traffic to support SEO strategies, some of the main search terms that lead users to the site are “AI titties” and “giantess boobs.”

Nucleo emailed two questions to the company at the contact address listed at the bottom of the site, asking how content moderation works on the platform and which model or models the generator uses.

At the time of publication, we had not received a response.

Reporting by Sofia Schurig

Graphics by Rodolfo Almeida

Editing by Sérgio Spagnuolo

Translated with the help of ChatGPT and revised by human editors