
Until recently, racial justice was apparently not on the list of concerns for the world's largest social media conglomerate.

It was only after the fallout from the murder of George Floyd by a police officer in the United States in May 2020, and the subsequent strengthening of the #BlackLivesMatter movement, that Facebook employees spoke up internally about the company's failure to support Black people and to design more effective policies advancing the racial justice agenda.

The discussion about race unfolded in the months after protests erupted across the U.S., and it changed the way the company analyzes demographic data, including race, as shown in several Facebook Papers documents reviewed by Núcleo in partnership with Folha de S. Paulo.

Until then, employees on various teams kept running into a major obstacle: the lack of racial information about users, which the platform justified on privacy grounds, kept them from grounding policies on race in data.

THIS MATTERS BECAUSE…

- Over the years, Facebook has been criticized for promoting algorithmic discrimination in its products, which has had detrimental effects on minorities on the platform

- The episode shows that, when pushed by external events, the company can speed up efforts to make improvements and fix problems

The Intercept Brasil also covered these racial issues in an article published on Dec. 1, when Núcleo and Folha were already working on this investigation.

When discussions of racial justice gained traction among employees, the challenge was to measure effectively whether Black users were being disproportionately affected or disenfranchised by the algorithm, a concern Facebook itself had heard in surveys it conducted.

Besides this challenge, the absence of racial information was also a disadvantage for customers and partners.

Even though Facebook did not provide race data, other companies working with its data, such as advertisers, had found a way around the gap: using secondary indicators as proxies to micro-target ads at Black users, as The Markup reported.

In practice, this workaround means that while advertisers cannot directly select a racial category for their target audience, they can select secondary categories that lead them to that audience.

In other words, ways of approximating such sensitive data had been available for some time.

"A more cynical take is that part of why we avoid measuring race is because we don’t want to know what our platform is actually doing – particularly at Facebook, if you can’t measure, you can’t act," one employee wrote in a post.

In the same post, other employees commented that the Responsible AI and AI Policy teams were actively working on the issue to map out possible ways forward.

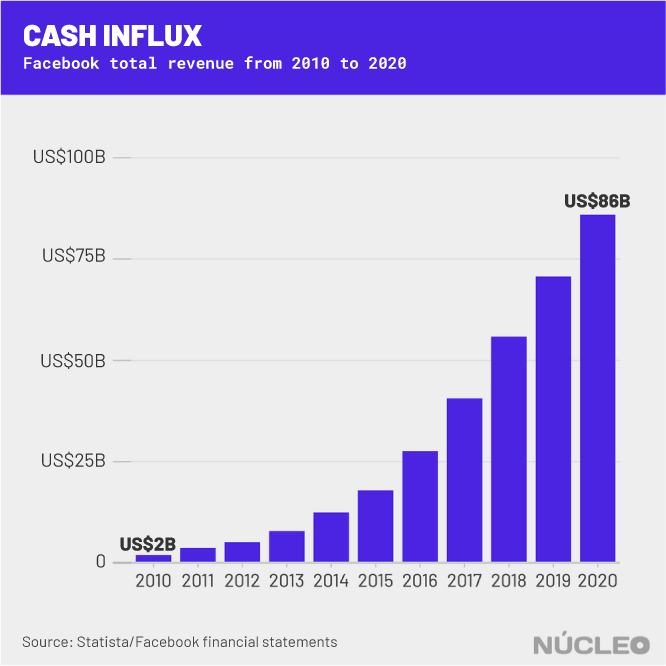

Resources abound. Facebook (now called Meta) owns the world's leading social networking apps, including Instagram and WhatsApp, used by billions of users.

The company reported total revenue of US$86 billion in 2020, up 22% from the previous year, while net income soared nearly 60% to US$29 billion.

In a statement sent by email, Meta said the following:

"Our teams work every day to improve the experiences of marginalized communities using Instagram and Facebook. This work is constantly evolving based on the issues we encounter on our platforms and the feedback we receive from the community. In November, our Civil Rights team released a progress report detailing some of the company's progress from last year's Civil Rights Audit. For example, the report highlights our work in developing a review process for product teams in which we assess the potential civil rights implications of new products. A key part of this effort is our work with civil rights experts. For us, it is essential that all communities have positive and safe experiences on our platforms, and we will continue to work toward that goal."

"IF YOU DON'T MEASURE IT, YOU CAN'T ACT ON IT"

One line of work that took shape amid the protests over Floyd's death dealt with the disproportionate harm Black people suffered from the moderation of content and profiles on the company's platforms.

"Are we giving different communities of users the reporting options they need? Are different communities of users disproportionately being reported on? Having content taken down? Having their accounts disabled? Having comments flagged?" asked the author of a June 3, 2020 post on the company's internal network.

The idea was to look at impacts at five different levels of enforcement of the community guidelines:

- policies;

- reporting;

- content removal;

- classifier detection;

- and account disabling.

But the lack of data was a major impediment to carrying out this work.

"It's hard to understand any part of this without the ability to look at data or a proxy for race. There could be self-identification by the user just for that internal and private purpose of making our product fairer, or we could study a sample of users with certain policy enforcement outcomes," wrote the person, whose name was withheld by the team handling the Facebook Papers.

On June 5, the same problem came up among other employees in a post on a forum called Integrity Ideas To Combat Racial Injustice.

"While we presumably don’t have any policies designed to disadvantage minorities, we definitely have policies/practices and emergent behavior that does." — UNIDENTIFIED FACEBOOK EMPLOYEE

"We should comprehensively study how our decisions and how the mechanics of the social network do or do not support minority communities," wrote the author of the post, who admitted that it would be difficult to make progress without measurements.

In the author's view, although Facebook meant well by not collecting data on users' race, the decision was problematic for two reasons:

- machine learning systems "are almost certainly able to implicitly guess the race of many users."

- many of the ideas discussed in the post are "extremely difficult to study without the possibility of conducting race-based analysis."

TEMPORARY SOLUTION THAT ENDURED

In September, a few months after discussions about racial justice began, a lengthy document entitled Enabling Measurement of Social Justice with U.S. Zip Codes was published.

Under a header image of a friendly mail carrier delivering “Good News,” the document's author concludes that "to support Racial Justice projects, we need to know how metrics vary on race and ethnicity. But our commitment to privacy means we have to be extremely cautious about collecting or inferring race and ethnicity."

It is not clear which team or department wrote the document, but the text states that in order to allow product development in the short term, different teams should use location and Census data to measure their products by neighborhood, race, and ethnicity.

The idea was to cross-reference location data with Census data to estimate the makeup of the population in each area, much like the census run by the Brazilian Institute of Geography and Statistics (IBGE), which was mentioned in comments on the post. This would solve the problem temporarily, at least until the Responsible AI and AI Policy teams, together with civil society actors, developed a solution that took users' privacy into account.

In this way, the privacy concerns that had previously kept the company from collecting such data would be addressed, since the method measures locations and geographies rather than individual people.
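The documents do not spell out the exact calculation. As a rough illustration only, the following is a minimal sketch of area-level measurement, assuming a hypothetical table in which each zip code has a user count, a product metric (here an invented "takedown rate"), and Census shares for each racial or ethnic group. Every name and number in it is made up for illustration and is not taken from Facebook's method.

```python
from dataclasses import dataclass


@dataclass
class ZipArea:
    """One zip code: a product metric plus Census demographic shares (hypothetical data)."""
    zip_code: str
    users: int                      # active users located in this zip code
    takedown_rate: float            # share of those users who had content removed
    group_shares: dict[str, float]  # Census share of residents in each group


def estimate_by_group(areas: list[ZipArea]) -> dict[str, float]:
    """Estimate the metric for each group by weighting every zip code by the
    expected number of users from that group who live there.

    Assumption: within a zip code, every group experiences the zip-wide rate.
    No individual user is labeled with a race; only areas are measured.
    """
    weighted_sum: dict[str, float] = {}
    weight_total: dict[str, float] = {}
    for area in areas:
        for group, share in area.group_shares.items():
            expected_users = area.users * share
            weighted_sum[group] = weighted_sum.get(group, 0.0) + expected_users * area.takedown_rate
            weight_total[group] = weight_total.get(group, 0.0) + expected_users
    return {g: weighted_sum[g] / weight_total[g] for g in weighted_sum if weight_total[g] > 0}


if __name__ == "__main__":
    # Two invented zip codes with opposite demographic makeups.
    areas = [
        ZipArea("zip-A", 1000, 0.02, {"Black": 0.8, "White": 0.2}),
        ZipArea("zip-B", 1000, 0.01, {"Black": 0.2, "White": 0.8}),
    ]
    estimates = estimate_by_group(areas)
    print({g: round(v, 4) for g, v in estimates.items()})  # {'Black': 0.018, 'White': 0.012}
```

Because each zip code's rate is spread across all of its residents, estimates like these describe places rather than people. That is the privacy trade-off the document accepted, and it is also why such figures cannot say anything reliable about a single user.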

What was supposed to be a temporary measure ended up being consolidated as a solution, publicly presented by Meta in mid-November.

In a report signed by researchers from the Artificial Intelligence team, the company presented three approaches to begin measuring race data for its users: two related to the process already underway, and a third based on users' self-declaration, designed so that Meta cannot see individual data.

Beyond the obstacles encountered by researchers within Meta, measuring race data was one of the recommendations of the Civil Rights Audit, a report produced over two years by independent auditors hired by the company after repeated criticism that its products were discriminatory.

Even so, inferring racial data, as Meta now intends to do, requires a more human approach, says Paulo Rená, an activist at Aqualtune Lab, university professor, and doctoral student in law.

"Facebook can infer people's race, it's not absurd to make mathematical inferences about a person who lives in a certain neighborhood, has a certain income and a certain last name," Rená told Núcleo.

"But we have to be careful with those inferences, because if I have an average that says that 90% of people in a group are a certain way, that doesn't determine that the person I'm analyzing is going to be part of that 90%, rather than the 10%," he explained.

According to Rená, there is no safeguard or guarantee that Facebook will exercise that kind of care.

BREITBART

The absence of data was not the only problem employees flagged. While the protests called by #BlackLivesMatter were still taking place, far-right U.S. media outlets associated with former president Donald Trump began describing them as riots and vandalism.

One such outlet was Breitbart, a website that grew under the leadership of Steve Bannon, Trump's former campaign chief, and that was featured on Facebook's News Tab.

On June 4, 2020, a Facebook employee published a post on Workplace (a kind of Facebook for businesses, which the company also uses internally) with the headline "Get Breitbart out of News Tab," alongside an image of the ultraconservative website's most recent headlines. "Do I need to explain this one?" he wrote in the caption. This was not the first time Breitbart had been the subject of controversy within the company.

In October 2018, there had been a discussion about admitting Breitbart to the platform's ad program. This would mean that companies and brands using Facebook Ads could have their ads displayed on Breitbart's site, generating revenue for the publication.

At the time, the person who posted the note said that allowing Breitbart to monetize through Facebook was a political statement and also bad for business since advertisers were blocking their ads from being displayed on that site.

By June 2020, however, Breitbart's stories were paying off for the company in the form of engagement.

An analysis by Media Matters, a U.S. media research website, showed that posts about the #BLM protests from right-wing pages were receiving more engagement than posts from "neutral" or left-wing pages, accounting for 40% of all interactions despite making up only a quarter of all posts about the protests.

"These articles are emblematic of a coordinated effort by Breitbart and similar hyperpartisan sources (none of which belong on the news tab) to paint Black Americans and Black-led movements in a very negative light," one person commented on the June 4 post.

"If our guidelines permit this kind of news source to be amplified as credible, then our guidelines are not ‘fighting racial injustice,’ as the goal of the group is. If we are proposing big ideas, then my contribution in the hat is that our guidelines for credible news should not include news sources that are contributing to narratives that perpetuate racial injustice." - UNIDENTIFIED RESPONSE IN A POST ON THE INTERNAL FACEBOOK NETWORK.

According to Indian researcher Ramesh Srinivasan, a Harvard Ph.D. and founder of the Digital Culture Lab at UCLA, Meta's racial justice initiatives would benefit more from collaborating with outside groups than from looking inward.

"Instead of more of a top-down approach that tries to use correlation to identify race, what Facebook should be doing is working with local and community organizations around the world. For example, organizations that work on civil rights for Black people," he said in an interview.

In Srinivasan's view, part of the solution is for the company to give up some of its power over moderation.

"Facebook doesn't need to publish its source code, but it does need to cede the power of content moderation to many different organizations that have the expertise to understand racial issues, gender issues, trans queer issues, and also geographic issues," he said.

HOW WE DID IT

The information was obtained from documents disclosed to the U.S. Securities and Exchange Commission (SEC) and provided to the U.S. Congress in edited form by Frances Haugen's legal counsel, in what became known as the Facebook Papers. The redacted versions received by the U.S. Congress were reviewed by a consortium of news outlets.

Núcleo Journalism had access to the documents and publishes this report in partnership with Folha de S. Paulo.

These documents were first reported by the Wall Street Journal, which called the series the Facebook Files.