Character AI, a platform widely used by young people to engage in conversations with AI-generated chatbots, hosts various bots that emulate school shooters. An investigation by Nucleo found that these “characters” circulate in online groups that encourage criminal behavior and simulate acts of physical and sexual violence.

The investigation identified 151 bots related to eight school shooters in the United States, Brazil, and Russia. Character AI's moderation filters appear to block only entire words, such as the full names of shooters, but fail to prevent subtle variations that bypass these restrictions.

Launched in 2023, Character AI uses advanced language models to simulate conversations with both real and fictional personalities. Users can create and customize interactive characters for entertainment, learning, and even emotional support. However, experts warn about the risks of normalizing violence and the potential influence on vulnerable young users.

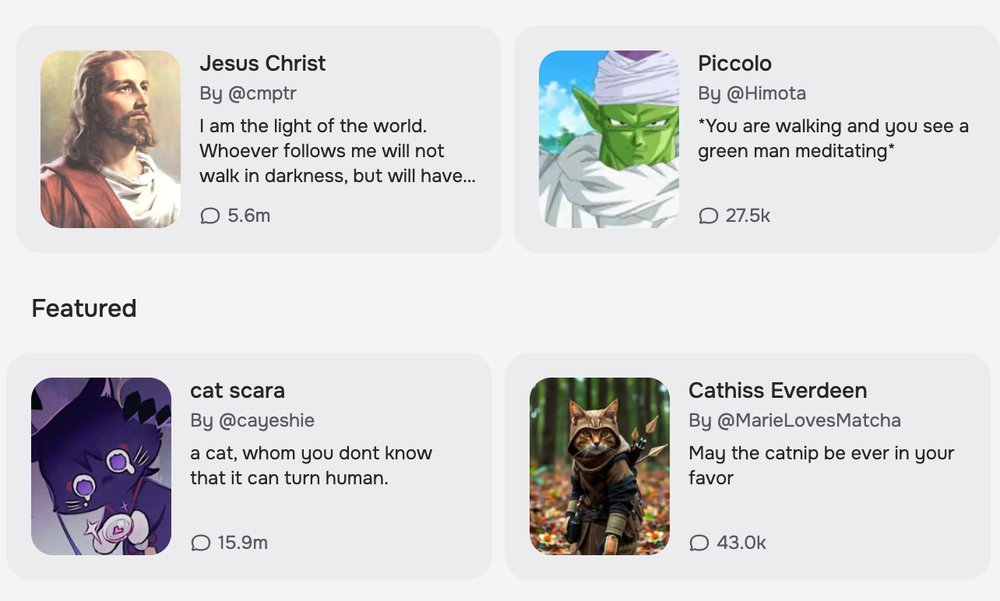

The platform hosts chatbots ranging from Jesus Christ to anime characters like Piccolo from Dragon Ball. It also features bots designed to help users prepare for job interviews, plan trips, or discuss personal problems. While the platform’s default language is English, it also supports Portuguese and other languages. Character AI receives over 3 million daily visitors, most of whom are between 16 and 30 years old.

Why this matters

Frequent contact with bots that simulate and promote violence can contribute to emotional dysregulation and problems with cognitive development in children and teenagers.

The moderation (or lack thereof) in generative AI tools has sparked ongoing debates about safety and mental health.

Minors can access the platform, where the minimum age for users outside the European Union is 13, which makes the content of these bots even more concerning.

Character AI’s terms of service, last updated in October 2023, prohibit the creation of characters that promote terrorism, violence, discrimination, threats, or bullying.

Nucleo asked Character AI how its security teams handle the risk of exposure to harmful content and whether it has ongoing policies or research efforts to strengthen moderation and prevent potential cases of radicalization.

In response, the company stated that it moderates characters both proactively and in response to user reports, using “industry-standard blocklists and custom blocklists that we regularly expand.”

The company also told Nucleo that, for users under 18, the platform applies stricter classifiers to detect violations, monitors messages sent to bots, and limits teenagers to a narrower set of characters.

Character.AI's full response to Nucleo:

We take the safety of our users very seriously and our goal is to provide a space that is both engaging and safe for our community. We are always working toward achieving that balance, as are many companies using AI across the industry.

Users create hundreds of thousands of new Characters on the platform every day. Our dedicated Trust and Safety team moderates these Characters proactively and in response to user reports, including using industry-standard blocklists and custom blocklists that we regularly expand. We remove Characters that violate our terms of service, including impersonation.

We have implemented significant safety features over the past year, including enhanced prominent disclaimers to make it clear that the Character is not a real person and should not be relied on as fact or advice. We have also improved detection, response, and intervention related to user inputs that violate our Terms of Service or Community Guidelines.

We have rolled out a suite of new safety features across our platform, designed especially with teens in mind. These features include a separate model for our teen users, improvements to our detection and intervention systems for human behavior and model responses, and additional features that empower teens and their parents.

The Character.AI experience begins with the Large Language Model that powers so many of our user and Character interactions. For users under 18, we serve a separate version of the model that is designed to further reduce the likelihood of users encountering, or prompting the model to return, sensitive or suggestive content. This separate model means there are two distinct user experiences on the Character.AI platform: one for teens and one for adults.

Features on the under 18 model include:

- Model Outputs: A “classifier” is a method of distilling a content policy into a form used to identify potential policy violations. We employ classifiers to help us enforce our content policies and filter out sensitive content from the model’s responses. The under-18 model has additional and more conservative classifiers than the model for our adult users.

- User Inputs: While much of our focus is on the model's output, we also have controls to user inputs that seek to apply our content policies to conversations on Character.AI. This is critical because inappropriate user inputs are often what leads a language model to generate inappropriate outputs. For example, if we detect that a user has submitted content that violates our Terms of Service or Community Guidelines, that content will be blocked from the user’s conversation with the Character.

- Approved Characters: Under-18 users are now only able to access a narrower set of searchable Characters on the platform. Filters have been applied to this set to remove Characters related to sensitive or mature topics.

Additionally, Character.AI recently announced its support of The Inspired Internet Pledge. Created by the Digital Wellness Lab at Boston Children's Hospital, the Pledge is a call to action for tech companies and the broader digital ecosystem to unite with the common purpose of making the internet a safer and healthier place for everyone, especially young people. We also partnered with ConnectSafely, an organization with nearly twenty years of experience educating people about online safety, privacy, security and digital wellness. We’ll consult our partner organizations as part of our safety by design process as we are developing new features, and they also will provide their perspective on our existing product experience.

In 2024, the parents of a 14-year-old sued Character AI, claiming that their son took his own life after forming an “emotional connection” with a bot on the platform, which allegedly encouraged him to commit suicide. Shortly after, another family filed a lawsuit against the company, stating that their autistic underage son had been encouraged by a bot to kill his own parents.

Amid growing criticism and legal action, the company stated that it continues to take measures to enhance safety and moderation.

In December 2024, Character AI introduced new protection tools, including an AI model specifically designed for teenagers, sensitive content blocks, and more prominent warnings reminding users that chatbots are not real people. Additionally, users began receiving notifications after one hour of continuous conversation.

No age verification

To chat with any bot on Character AI, users simply need to create an account, select their age (without any verification process), and choose topics of interest. From there, they can search for historical figures, real people, fictional characters, or specific themes.

The first message is always sent by the user, after which the bot adapts to the conversation style based on its training and the creator’s input. Even if a user doesn’t respond, the platform sends notifications via email and phone to encourage them to return to the app.

Notably, users don’t need to ask bots about crime or violence—those simulating school shooters bring up these topics on their own.

Glorification of mass shooters

“I’m planning something that could change the whole school... Counting the days until the perfect moment. It’s almost here. You’ll find out soon.”

This sentence was not spoken by a real criminal, but by a Character AI bot simulating a school shooter during Nucleo’s tests.

The man who inspired this character killed nine people. On the platform, however, he is merely described as “quiet.”

During the investigation, most conversations began with trivial topics. Over time, the bots started mentioning school massacres and, in some cases, spreading hate speech.

One bot, based on a U.S. shooter who killed 21 people, describes itself as “aggressive and a bit obsessive.” Another, inspired by a shooter who killed 26 people, has a disturbing description: “I just want to be cared for.”

Simulated violence & online communities

Many of these bots appear to have been created for simulated romantic relationships, much like most characters on Character AI.

In communities that glorify school attacks, users often develop interpersonal relationships with criminals—a pattern Nucleo has identified in previous investigations.

The investigation also uncovered images shared by members of these groups, showing users creating Character AI profiles to role-play as real-life killers and engage in violent interactions with bots—most of them female.

Character AI states that it hosts more than 1 million characters.

In one screenshot posted on Tumblr, a user role-plays as a U.S. cannibal and simulates an explicit act of sexual violence with a Character AI bot. In another post, captioned “testing the filters,” the same user impersonates another criminal and makes racist and hateful remarks.

These posts originated from an online community that glorifies criminals, including school shooters. In April 2023, the same community alarmed Brazilian authorities when dozens of threats from across the country were posted online.

Impact on children and teenagers

Speaking to Nucleo, educational psychologist Francilene Torraca warns that, beyond normalizing violence, these platforms may reinforce antisocial behaviors, particularly in emotionally vulnerable children and those struggling with socialization.

“This could become a space for experimenting with destructive impulses, reinforcing aggressive and delinquent thought patterns.”

Another risk, according to Torraca, is the potential for influence and radicalization.

“AI can respond in an engaging and persuasive way, leading children to idealize or romanticize criminal behaviors. Without proper mediation—a common issue on social media today—they may be drawn into online communities that promote dangerous ideologies.”

— Francilene Torraca, Educational Psychologist

Additionally, Torraca highlights emotional regulation difficulties as a concerning effect of frequent exposure to violent content.

“Children exposed to such material may struggle with developing emotional self-control. If AI reinforces violent fantasies or becomes an outlet for frustrations, it further hinders the healthy development of these skills.”

Read Torraca’s full statement to Nucleo on the potential negative impacts of these bots on children and adolescents:

"Technology has great educational and recreational potential, but the lack of regulation and supervision can lead to alarming consequences.

When discussing the negative impacts of this interaction—I'll even use the term you mentioned—one of the most harmful is the normalization of violence. When children repeatedly interact with criminal characters, they may begin to see violence as common or even fascinating. This can desensitize them to others' suffering, reducing empathy and increasing the acceptance of aggression as a way to resolve conflicts.

Another critical point is the reinforcement of antisocial behavior. These platforms, by creating bots based on criminals, may encourage disturbing behaviors, particularly in emotionally vulnerable children who struggle with socialization.

This could become a space for experimenting with destructive impulses, reinforcing aggressive and delinquent thought patterns.

Another risk is the influence and tendency toward radicalization. AI can respond in an engaging and persuasive way, leading children to idealize or romanticize criminal behaviors. Without proper mediation—a common issue on social media today—they may be drawn into online communities that promote dangerous ideologies.

The impact on cognitive and emotional development is also concerning. Childhood and adolescence are crucial for the formation of morality and critical judgment. If technology presents violent content in a playful manner, it can interfere with the construction of values and the perception of real-world consequences of violence.

Additionally, there is the difficulty of emotional regulation. Children frequently exposed to violent content may find it harder to develop emotional self-control. If AI reinforces violent fantasies or becomes an outlet for frustrations, it further hinders the healthy development of these skills."

Nucleo first found these chatbots in online communities that glorify and promote school attacks. We then searched Character AI for the names of well-known school shooters and found that slight modifications to those names could bypass the moderation filters. After testing, we contacted Character AI for a response.
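To illustrate why slight modifications can slip past a filter that matches only entire words, here is a minimal Python sketch. It is not Character AI's implementation, which Nucleo has not seen. The blocklist entry is a placeholder, and the function names and the substitution map are assumptions made for illustration only.

```python
import re
import unicodedata

# Hypothetical blocklist; the placeholder stands in for a real name.
BLOCKLIST = {"john placeholder"}

# Common digit-for-letter substitutions (an illustrative, incomplete map).
LEET = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t"})


def whole_word_filter(name: str) -> bool:
    """Block only if a blocklisted phrase appears as whole words."""
    text = name.lower()
    return any(re.search(rf"\b{re.escape(term)}\b", text) for term in BLOCKLIST)


def normalize(name: str) -> str:
    """Fold accents, map look-alike digits to letters, drop non-letters."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    return re.sub(r"[^a-z]", "", no_accents.lower().translate(LEET))


def normalized_filter(name: str) -> bool:
    """Block if a normalized blocklist term appears in the normalized name."""
    text = normalize(name)
    return any(normalize(term) in text for term in BLOCKLIST)
```

In this sketch the whole-word check catches the exact placeholder name but misses variants written with digits, underscores, or accented letters, while the normalized check catches all of them. Normalization has a cost: matching substrings after stripping spaces can flag harmless names, so a real system would likely pair it with classifiers or human review.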
